We address the problem of interaction between humans and groups of robots whose local synergy is exploited to accomplish complex tasks (human-robot interaction in general is discussed further below). Multi-robot systems have several advantages over single robots, e.g., higher performance in simultaneous coverage of a spatial domain, better affordability compared with a single bulky system, and robustness against single-point failures.

As a multi-robot platform, we considered a group of Unmanned Aerial Vehicles (UAVs), because of their high motion flexibility and their potential to reach dangerous or inaccessible locations. We envision a scenario where the UAVs possess some level of local autonomy and act as a group, e.g., by maintaining a desired formation, avoiding obstacles, and performing additional local tasks. At the same time, the remote human operator controls the overall UAV motion and receives, through haptic feedback, cues that are sufficiently informative about the state of the remote UAVs and their environment. We addressed two distinct possibilities for human/multi-robot teleoperation: a top-down approach and a bottom-up approach, which differ mainly in the way the local robot interactions and the desired formation shape are treated.

Top-down approach

In [1] the N UAVs are abstracted as 3-DOF first-order kinematic VPs (virtual points): the remote human user teleoperates a subset of these N VPs, while each real UAV tracks the trajectory of its own VP. The VPs collectively move as an N-node deformable flying object, whose shape (chosen beforehand) autonomously deforms, rotates and translates in reaction to obstacles (to avoid them) and to the operator commands (to follow them). The operator receives haptic feedback conveying the motion state of the real UAVs and the presence of obstacles, the latter via their collective effects on the VPs. Passivity theory is exploited to prove stability of the overall teleoperation system.

The figure shows an example of 8 UAVs arranged in a cubic shape and controlled with a haptic device.
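The top-down idea can be illustrated with a minimal sketch, assuming a simple form for each velocity term. It is not the controller of [1]: the gains, the spring-like shape term, the point-obstacle repulsion, and all function names are hypothetical choices made for illustration. UAV tracking of the VPs and the haptic feedback are omitted.

```python
import math

# All gains and thresholds are illustrative assumptions, not values from [1].
K_SHAPE = 1.0   # gain restoring the desired inter-VP distances
K_OBST = 0.5    # obstacle repulsion gain
D_OBST = 1.5    # repulsion is active only within this distance
DT = 0.02       # integration step [s]


def vp_velocity(i, vps, desired, obstacles, operator_cmd, teleoperated):
    """Velocity input u_i of VP i under the first-order model p_i' = u_i."""
    u = [0.0, 0.0, 0.0]
    # Shape term: spring-like correction of each inter-VP distance.
    for j, pj in enumerate(vps):
        dist = math.dist(vps[i], pj)
        if j == i or dist < 1e-9:
            continue
        gain = K_SHAPE * (dist - desired[i][j]) / dist
        u = [uk + gain * (b - a) for uk, a, b in zip(u, vps[i], pj)]
    # Obstacle term: push away from nearby point obstacles.
    for obs in obstacles:
        dist = math.dist(vps[i], obs)
        if 1e-9 < dist < D_OBST:
            gain = K_OBST * (1.0 / dist - 1.0 / D_OBST) / dist
            u = [uk + gain * (a - o) for uk, a, o in zip(u, vps[i], obs)]
    # Operator term: only the teleoperated subset receives the command.
    if i in teleoperated:
        u = [uk + c for uk, c in zip(u, operator_cmd)]
    return u


def step(vps, desired, obstacles, operator_cmd, teleoperated):
    """Advance all VPs by one explicit Euler step."""
    vels = [vp_velocity(i, vps, desired, obstacles, operator_cmd, teleoperated)
            for i in range(len(vps))]
    return [[p + DT * v for p, v in zip(pi, vi)] for pi, vi in zip(vps, vels)]


# Eight VPs at the corners of a unit cube; the desired shape is the initial one.
cube = [[float(x), float(y), float(z)]
        for x in (0, 1) for y in (0, 1) for z in (0, 1)]
desired = [[math.dist(a, b) for b in cube] for a in cube]
moved = step(cube, desired, obstacles=[], operator_cmd=(1.0, 0.0, 0.0),
             teleoperated=set(range(8)))
```

When every VP is teleoperated and no obstacle is nearby, the cube translates rigidly. Passing a smaller `teleoperated` set moves only those VPs at first, and the shape term then drags the others along, which is one simple way to picture the deformable formation.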

Bottom-up approach

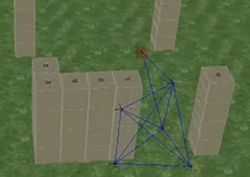

In [2] the N UAVs are abstracted as 3-DOF second-order VPs: the remote human user teleoperates a single leader, while the motion of the remaining followers is determined by local interactions (modeled as spring/damper couplings) among themselves and with the leader, and by repulsive interactions with the obstacles. The overall formation shape is not chosen beforehand but results from the UAVs' motion. Split and rejoin decisions can be based on any criterion, e.g., the UAVs' relative distance and their relative visibility (i.e., when two UAVs are too far apart or their line of sight is obstructed by an obstacle, they break their visco-elastic coupling). The operator receives haptic feedback conveying the motion state of the leader, which is in turn influenced by the motion of its followers and their interaction with the obstacles. Passivity theory is exploited to prove stability of the overall teleoperation system.
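The spring/damper couplings described above can be sketched as follows. This is an illustration under stated assumptions, not the model of [2]: the unit mass, the linear spring with a fixed rest length, the gains, and the velocity-tracking term that stands in for the operator command on the leader are all hypothetical. Obstacle repulsion and haptic feedback are left out.

```python
import math

# Illustrative parameters (assumptions, not values from [2]).
MASS = 1.0       # mass of each second-order VP
K_SPRING = 2.0   # spring stiffness of an inter-agent coupling
B_DAMP = 1.5     # damping on the relative velocity of a coupling
REST_LEN = 1.0   # spring rest length
B_LEADER = 2.0   # gain with which the leader tracks the commanded velocity
DT = 0.01        # integration step [s]


def coupling_force(pi, vi, pj, vj):
    """Force on agent i from its spring/damper coupling with agent j."""
    dist = math.dist(pi, pj)
    if dist < 1e-9:
        return [0.0, 0.0, 0.0]
    spring = K_SPRING * (dist - REST_LEN) / dist
    return [spring * (b - a) + B_DAMP * (wb - wa)
            for a, b, wa, wb in zip(pi, pj, vi, vj)]


def step(pos, vel, edges, v_cmd, leader=0):
    """One semi-implicit Euler step of m * p_i'' = sum of forces on agent i."""
    forces = [[0.0, 0.0, 0.0] for _ in pos]
    for i, j in edges:
        f = coupling_force(pos[i], vel[i], pos[j], vel[j])
        for k in range(3):
            forces[i][k] += f[k]
            forces[j][k] -= f[k]  # equal and opposite reaction
    # Assumed operator action: the leader is pulled toward the commanded velocity.
    for k in range(3):
        forces[leader][k] += B_LEADER * (v_cmd[k] - vel[leader][k])
    new_vel = [[v[k] + DT * f[k] / MASS for k in range(3)]
               for v, f in zip(vel, forces)]
    new_pos = [[p[k] + DT * v[k] for k in range(3)]
               for p, v in zip(pos, new_vel)]
    return new_pos, new_vel


# A leader (index 0) followed by two agents in a chain, initially at rest.
pos = [[0.0, 0.0, 0.0], [-1.0, 0.0, 0.0], [-2.0, 0.0, 0.0]]
vel = [[0.0, 0.0, 0.0] for _ in pos]
edges = [(0, 1), (1, 2)]
for _ in range(3000):  # 30 s of simulated time
    pos, vel = step(pos, vel, edges, v_cmd=(0.5, 0.0, 0.0))
```

Only the leader sees the command; the followers start moving because the couplings transmit the leader's motion along the chain, so the formation shape emerges from the dynamics rather than being prescribed.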

The figure shows an example of 6 UAVs among 4 obstacles, together with the corresponding connectivity graph. The leader UAV is surrounded by a transparent red sphere.
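A connectivity graph of this kind can be recomputed from the distance and visibility criteria mentioned for the split and rejoin decisions. The sketch below is one possible rule, assuming spherical obstacles and a single distance threshold `D_MAX`; both are hypothetical simplifications, as are the function names, and the source does not state the exact rule used in [2].

```python
import math
from itertools import combinations

D_MAX = 2.0  # hypothetical maximum distance for a coupling to exist


def segment_blocked(p, q, center, radius):
    """True if the segment p-q passes through the sphere (center, radius)."""
    pq = [b - a for a, b in zip(p, q)]
    pc = [c - a for a, c in zip(p, center)]
    len2 = sum(x * x for x in pq)
    # Parameter of the segment point closest to the sphere center.
    t = 0.0 if len2 == 0 else sum(x * y for x, y in zip(pq, pc)) / len2
    t = max(0.0, min(1.0, t))
    closest = [a + t * x for a, x in zip(p, pq)]
    return math.dist(closest, center) < radius


def connectivity(pos, obstacles, d_max=D_MAX):
    """Edges (i, j), i < j, between agents that are close and mutually visible."""
    edges = set()
    for i, j in combinations(range(len(pos)), 2):
        close = math.dist(pos[i], pos[j]) <= d_max
        visible = not any(segment_blocked(pos[i], pos[j], c, r)
                          for c, r in obstacles)
        if close and visible:
            edges.add((i, j))
    return edges


# Four agents and one spherical obstacle between agents 0 and 1.
agents = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (3.0, 0.0, 0.0), (1.5, 1.5, 0.0)]
obstacles = [((0.75, 0.0, 0.0), 0.2)]
edges = connectivity(agents, obstacles)
```

Re-evaluating `connectivity` at each control step makes a coupling split when two agents drift apart or lose line of sight, and rejoin once both conditions hold again. Here agents 0 and 1 are close enough but the obstacle blocks their line of sight, so that edge is absent.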

Human-robot interaction

Human-robot interaction is a very active research area which spans a wide variety of topics. A non-exhaustive list includes robot mechanical design and low-level control, higher-level control and planning, learning approaches, cognitive and/or physical interaction, and human intention/emotion interpretation. These efforts are guided by the accepted vision that in the future humans and robots will seamlessly cooperate in shared or remote spaces, with robots thus becoming an integral part of our daily life. For instance, robots are expected to relieve us from monotonous and physically demanding work in industrial settings, or to help humans deal with complex/dangerous tasks, thus augmenting their capabilities. Three cases of interaction can be distinguished:

- Coexisting interaction: the robots share the environment with humans not directly involved in their task. Examples are: housekeeping, city cleaning, or navigation in crowded areas. In these scenarios, the robots should minimize interference with any human activity, but also be prepared to resolve unexpected conflicts with humans;

- Conditional interaction: the robots need to be guided/helped by expert human operators in tasks which are either too sensitive or too hard to solve given the existing ethical/technological situation. Examples are: cooperation with a surgeon in medical operations, or with firefighters in search and rescue tasks. In these scenarios, the robots should complement the human skills/roles in order to maximize the probability of task achievement as in, e.g., telepresence applications where the human operator's capabilities are mediated/magnified by the remote robots;

- Essential interaction: the robots are asked to accomplish tasks in which the humans take a passive, but essential, role. Examples are: actively assisting patients in a medical operation, or passengers during their transportation. In these scenarios, the robots should be able to interpret the actions of humans who are assumed not to be specifically trained in interacting with robots.

In all interaction cases (which may also occur simultaneously), it is interesting to study the best level of autonomy for the robots and the sensory feedback that the humans need in order to take an effective role in the interaction.

Credits

This page is an excerpt from [3].

References

- [1] “Haptic Teleoperation of Multiple Unmanned Aerial Vehicles over the Internet”, in 2011 IEEE Int. Conf. on Robotics and Automation, Shanghai, China, 2011, pp. 1341-1347.

- [2] “A Passivity-Based Decentralized Approach for the Bilateral Teleoperation of a Group of UAVs with Switching Topology”, in 2011 IEEE Int. Conf. on Robotics and Automation, Shanghai, China, 2011, pp. 898-905.

- [3] “Towards Bilateral Teleoperation of Multi-Robot Systems”, in 3rd Int. Work. on Human-Friendly Robotics, Tuebingen, Germany, 2010.